- In workaround 1a we ensured that the query selecting from the mutating table was executed from within an autonomous transaction.

- In workaround 1b we ensured that this query was being executed via a loopback database link.
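As a reminder of what the two workarounds look like, here is a minimal sketch. The EMP column layout, the procedure and trigger names, the error number and the database link name LOOPBACK are all assumptions made for illustration; they are not necessarily the names used earlier in this series.

```sql
-- Sketch only. Assumed names: EMP(empno, ename, job, sal, deptno),
-- procedures check_clerk_1a / check_clerk_1b, database link LOOPBACK.

-- Workaround 1a: the check query runs inside an autonomous transaction.
create or replace procedure check_clerk_1a (p_deptno in number) is
  pragma autonomous_transaction;
  l_clerks pls_integer;
begin
  select count(*)
  into   l_clerks
  from   emp
  where  deptno = p_deptno
  and    job    = 'CLERK';

  if l_clerks = 0 then
    raise_application_error(-20000, 'A manager requires a clerk in the same department.');
  end if;
end;
/

-- Workaround 1b: the same query, routed through a loopback database link.
create or replace procedure check_clerk_1b (p_deptno in number) is
  l_clerks pls_integer;
begin
  select count(*)
  into   l_clerks
  from   emp@loopback
  where  deptno = p_deptno
  and    job    = 'CLERK';

  if l_clerks = 0 then
    raise_application_error(-20000, 'A manager requires a clerk in the same department.');
  end if;
end;
/

-- Row trigger that runs the check for every inserted manager.
create or replace trigger emp_air
after insert on emp
for each row
begin
  if :new.job = 'MANAGER' then
    check_clerk_1b(:new.deptno);  -- or check_clerk_1a for workaround 1a
  end if;
end;
/
```

The only difference between the two procedures is the pragma in 1a and the `@loopback` suffix in 1b; the trigger itself is identical in both cases.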

We also discussed the major difference between the two workarounds:

- 1a: the query sees the mutating table as it existed prior to the start of the main transaction.

- 1b: the query sees the mutating table as it is being mutated by the triggering DML statement. Essentially this workaround behaves as if Oracle did not have a mutating table error at all.

Apart from not solving the problem properly, workaround 1b also plants a booby trap in your application code: your code can now behave non-deterministically.

To discuss this, we will investigate how our row-trigger behaves for a multi-row insert rather than a single-row insert. Our business rule says that no manager can be without a clerk in the same department. A multi-row insert that adds both the manager and the necessary clerk as the first two employees of a new department in one go is therefore a valid insert statement, and our trigger-based rule-enforcement code should allow it.

Let's look at the behavior of our row-trigger using workaround 1b (loopback db-link) first. Suppose we have a table called EMP_STAGE. This (staging) table is holding employee-rows that are to be inserted into the real EMP table. Table EMP_STAGE currently holds two employees: a manager and a clerk both working in department 42.
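A staging table like this could be set up as sketched below. The employee numbers 110 (manager) and 111 (clerk) are the ones used in this post; the column layout (empno, ename, job, sal, deptno), the names and the salaries are assumptions made for illustration.

```sql
-- Sketch only: assumes EMP has the columns (empno, ename, job, sal, deptno).
-- Create an empty staging table with the same structure as EMP.
create table emp_stage as
select * from emp where 1 = 0;

-- One manager and one clerk for the new department 42.
-- Names and salaries are made up.
insert into emp_stage values (110, 'Mia',  'MANAGER', 9000, 42);
insert into emp_stage values (111, 'Carl', 'CLERK',   3000, 42);
commit;
```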

Now see what our row-trigger does.
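The statement under test is the plain two-row insert from the staging table. EMP is assumed to contain no employees in department 42 at this point.

```sql
-- Multi-row insert: no ORDER BY, so the order in which the two
-- staged rows reach EMP is left to the database.
insert into emp
select * from emp_stage;
```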

Our row-trigger does not allow this insert. Why? Well, think about it. How is the statement 'insert into EMP select * from EMP_STAGE' executed? In particular, in what order are the rows selected from EMP_STAGE and then inserted into EMP? Apparently, in the case above the manager was the first row to be selected and inserted into EMP. This fired our row-trigger, which looked at the mutating table (via the loopback db-link) and did not find the required clerk in department 42. Hence our row-trigger raised the business rule error. The multi-row insert statement never gets to insert the second row (the clerk): it is cancelled by the raise_application_error and rolled back.

But what if the rows happened to be processed in a different order? We can force this by simply adding an order-by clause to the subquery in the insert statement (we re-execute the insert and have added 'order by empno desc'):
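The re-executed statement looks like this. Only the ORDER BY clause is new; with the staged rows 110 (manager) and 111 (clerk), descending order puts the clerk first.

```sql
-- Same insert, but with a forced processing order:
-- empno 111 (the clerk) is inserted before empno 110 (the manager).
insert into emp
select * from emp_stage
order by empno desc;
```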

Our two-row insert statement now executes successfully!

So what happened in this case? Due to the order by clause we've ensured that employee 111 (the clerk) got inserted first. The row-trigger fires, sees it's not a manager and therefore nothing needs to be done. Next employee 110 got inserted. The row-trigger fires again, sees it's a manager, and executes the business rule check by calling our procedure. This procedure now queries the EMP table (via the loopback db-link) and it actually sees (!) the clerk that just got inserted by the same statement, and therefore executes successfully.

Now, the order in which rows get inserted by a multi-row insert statement is (and rightfully should be) of no concern to you. Imagine that the subquery is not a simple 'select * from emp_stage' but a complex multi-table join. Depending on the current contents and sizes of the tables involved, statistics might differ, which might lead to a different execution plan for the insert statement, which in turn might change the order in which the rows get inserted. If your code is not immune to this order, then in effect your code executes in a non-deterministic fashion. And this is the essence of why ORA-04091 is your friend. It protects you against developing software that might work today and not work tomorrow.

So how does this all work with the autonomous transaction workaround? Here's the same two-row insert for that scenario, executed without and with the forced order (EMP again has no employees in department 42).

As you can see, both versions of the insert statement fail with this workaround. The explanation is simple. Since the query that checks for the necessary clerk runs inside an autonomous transaction, all it sees is the committed contents of the EMP table, without any of the changes made by the main transaction, and in that view there are no employees in department 42. So in this case the 'workaround' is not a workaround at all: yes, it prevents the mutating table error, but no, it does not allow a valid insert statement to execute successfully. Note that it would not even allow two subsequent single-row inserts in one transaction: first insert a clerk, then insert a manager. With this workaround you are forced to commit the clerk first, and only then insert the manager.
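The forced commit can be sketched as follows, assuming the autonomous transaction workaround is in place and EMP has no employees in department 42. The column layout, names and salaries are assumptions made for illustration.

```sql
-- Sketch only: assumes EMP(empno, ename, job, sal, deptno) and workaround 1a.
insert into emp values (111, 'Carl', 'CLERK', 3000, 42);

-- Without this commit, the check inside the autonomous transaction
-- cannot see the clerk, and the manager insert below is rejected.
commit;

insert into emp values (110, 'Mia', 'MANAGER', 9000, 42);
commit;
```

The intermediate commit splits what should be one unit of work into two transactions.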

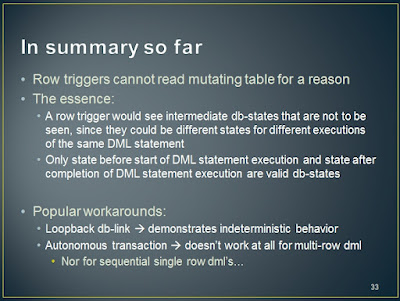

So, in summary:

Again, forget about these two red herrings: don't use them.

In the following posts we will start developing how to correctly work around the mutating table error. And as many of you probably know, it involves a combination of row and statement triggers.

In the meantime happy holidays!

Great blog!

Here is a nastier variation of why the autonomous transaction doesn't work at all.

Assume there is a clerk in department 22:

INSERT INTO emp VALUES (444,'Joe','CLERK',5555,22);

COMMIT;

Now, in a SINGLE transaction, we delete him and add a manager:

DELETE FROM emp where empno=444;

INSERT INTO emp VALUES (555,'Jack','MANAGER',7777,22);

COMMIT;

Voilà: the data now violates the business rule. The autonomous transaction still sees the clerk that we deleted within the transaction.

Ofir

Ahh, yes. The other way around.

Nice addition, thanks.

Toon
